Why We Think We’re Right Even When We’re Wrong

Counteract the illusion of explanatory depth by asking this one question.

When children are little, they are in the business of asking questions. My kids were question machines. “Why is the sky blue?” “Can we get a pet snake?” “How does an airplane work?” “Do lions get mosquito bites?” “Why can’t dogs and cats have babies?” I was surprised to learn that on average preschoolers ask a hundred questions a day. I would have guessed at least five hundred. Other than having a clear-cut response to the pet snake question (NO WAY), I didn’t have all the answers. Sometimes I thought I did, but as soon as I began to explain, I quickly realized that I had no clue what I was talking about. “The sky is blue because… uh… the sun and visible light and maybe I need to look this up.” Thank goodness for Google.

The illusion of explanatory depth

Until you have tried to explain something to someone in detail, you might think you understand the topic better than you do. In a study conducted at Yale, participants were asked to rate their understanding of everyday objects including toilets and zippers. Of course, they believed that they knew how these objects operated. Duh. They use them every single day. Confidence reigned. That was until they were asked to write a detailed explanation of how these objects actually work. As it turns out, toilets and zippers are far more complicated than they appear. The Yale psychologists behind the study, Leonid Rozenblit and Frank Keil, named this phenomenon the illusion of explanatory depth, and cognitive scientists Steven Sloman and Philip Fernbach have since explored it further.

As this research highlights, we often think we have an in-depth understanding of a topic, but when push comes to shove and we’re put on the spot to explain ourselves, our cluelessness becomes apparent. When it comes to toilets and zippers, not understanding how they work doesn’t really matter. Sure, it would be nice to know, and certainly helpful if you have a curious seven-year-old, but in the big scheme of things, it’s not something to worry about.

Part of the reason we assume that we know more than we do is that we are cognitive misers, a term introduced by Susan Fiske and Shelley Taylor in 1984. Keep in mind that being a cognitive miser does not imply stinginess. Rather, it recognizes that in order to avoid information overload, we tend to skip over details and avoid deep thinking. For instance, we might read the headline of a news story or get the gist from X without taking the time to read about the situation in full. We also rely on the expertise of others, and sometimes the line between what they know and what we know blurs. This is of course problematic when it comes to our beliefs about politics. If the people we trust lack an in-depth understanding of the issues, so will we.

The illusion of adequate information

Vicarious knowledge isn’t knowledge at all. A study found that repeatedly watching people perform a skill on YouTube, such as throwing darts or doing the moonwalk, fosters the illusion of skill acquisition. The more participants watched videos of others moonwalking or throwing darts, the more they believed that they could do it too. Despite their predictions that their skills had vastly improved from watching the videos, they hadn’t improved one iota. I had a friend who thought he was ready to hit the course after watching a few hours of golf on television. I was sure I could do my own makeup after watching a few Huda Kattan tutorials. It did not go well. Unfortunately, mastery is not an armchair sport. If we want to get better at something, we have to practice it in real life.

Overconfidence in ability often goes hand in hand with a lack of competence in performance. Contributing to this problem is the flawed assumption that we have all the skills or information necessary to do the job or make a decision. The truth is that most of the time, we don’t even consider that there might be a different way to think about or approach a situation. This is known as the illusion of adequate information. In one study, participants were divided into three groups and told about a school that lacked adequate water. The first group read an article that argued the school should merge with another school that had adequate water. The second group read an article that argued against a merger. The third group, the control, read both articles. The two groups who read only half the story, either for or against a merger, were convinced they had enough information to make an informed decision. Even though they didn’t have all the information, they were confident that they did. Notably, they were more sure of themselves than the people who had read both sides of the story. Alas, just because we have strong feelings about something does not mean that we actually understand it. Feelings aren’t facts.

How does that work, exactly?

Based on a few tidbits of information, most of us think we are in the know and this can lead to a host of problems including political polarization and also conflicts in our personal relationships. Perhaps when we disagree with someone, our first move should be to ask ourselves, “Is there something I am missing here?” On her awesome podcast Choiceology, Katy Milkman recently interviewed Steven Sloman of Brown University about how best to puncture the illusion of thinking we understand something we don’t fully understand. He suggests posing a simple question: “How does that work, exactly?” Asking someone to explain something invites curiosity and reflection. As he explained on the podcast:

“When you're asked to explain, you have to talk about something outside of your own brain. You're not talking about your opinion. You're not talking about why you have the position you have on this policy. Rather you're talking about the policy itself and what its consequences in the world will be. And I believe that externalizing things sort of separates them from you, so you're thinking about them mechanistically, but you also don't have the same investment in being right. So, I think it's a way to achieve compromise.”

The idea is not to humiliate someone; it is to invite humility.

I cannot help but think that whenever we have strong feelings about something, it is worth asking others and ourselves, “How does that work, exactly?” At a time when reactions are often outsized, it just might help put things in perspective and help us recognize that we don’t have all the answers.

If you buy one thing today, make it this…

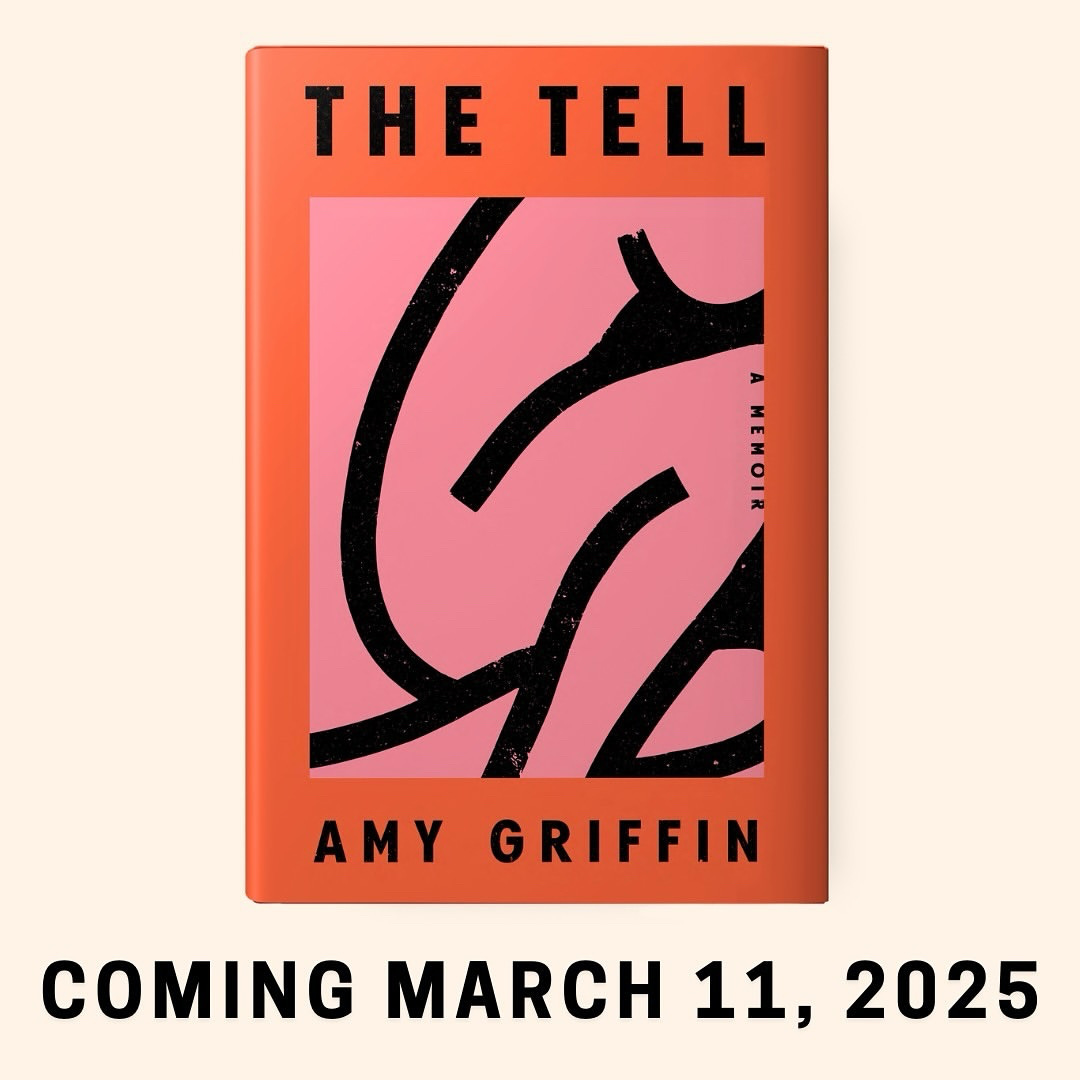

Amy Griffin is one of the bravest women I know. Her memoir will inspire women everywhere to understand how secrets can hold us back and more importantly how to live each day to the fullest. Counting the days until March 11th!

I pre-ordered my copy of The Tell. You’ll be glad you did too.
